Don't Guess What The Problem Is

When things go wrong in production, it’s easy to fall into the trap of guessing! I’ve been there too. But guessing can lead to wasted effort, worse technical debt, and more confusion. In this post, I share what I learnt to do instead!

When a critical incident happens, it's a natural reaction to start guessing (and second-guessing) what the problem is. Over the years, I've personally had [and seen] these kinds of reactions more times than I can count 😄

Maybe it's this non-optimized code that we initially decided not to prematurely optimize

I think it's because I'm not caching this intensive computation; I initially thought it would be fine but maybe I was wrong

It's probably that database index I thought we wouldn't need; maybe we do

I knew upgrading this was a bad idea, I mean it passed all tests and QA but for some reason I guess we missed something

This is counter-productive! Please don't do that.

Why Guessing Is Counter-Productive

First you must understand that you and your peers are competent engineers. If you carefully considered something in the past and decided it'd be a non-issue, you were most likely correct.

Having an incident now does not change that. The root cause is likely something else.

Now let's be real: if you were capable of guessing what the root cause is, you wouldn't have allowed it to happen in the first place. Therefore, you are NOT in a position to make an accurate guess in this situation (and that's okay).

Guessing often leads to rushed efforts [like prematurely optimizing code, adding caches, etc] to fix what you think might be the problem. This will:

- Consume tens [if not hundreds] of work hours. After all, you previously discarded this work for a reason.

- Add technical debt since you'll likely be doing this work under pressure so you're not in a place to make well-thought-out considerations.

- Most likely not solve the issue which will lead to further desperation, more self-doubt, and even lower-quality guesses at what the problem is.

What To Do Instead

Step 0 - Relieve the immediate pain

"Start by relieving the pain, then treat the cause." ~ Ibn Sina (father of early modern medicine)

Users care most about being able to use the product they signed up for. Start by throwing more servers into production, upsizing that database, rolling back that upgrade, anything to get service back up as soon as possible even if it's expensive for the first few hours.

At this point, the root cause is mostly irrelevant. The faster you get service back up, the less annoyed your users will be and the faster everyone can get their stress levels back under control 😄

Step 1 - Make verifiable assumptions and verify them

Understand that software is deterministic; things don't "just happen". Everything has a completely logical reason: performance doesn't "decide" to degrade, bugs don't "choose" to happen, and no, the server doesn't "know" that you're looking at it 😄

When making assumptions, you must intentionally govern your thought process and avoid these common pitfalls:

- Do NOT make speculative assumptions. Assumptions must be based on fact NOT on other assumptions. Verify your assumptions before building other assumptions on them.

  - Here is an example: Maybe response time is slow because the database server is overloaded. I think it may be overloaded because a new non-optimized query we started using yesterday could be increasing its CPU usage, causing it to choke and be unable to answer my small query effectively. I'll optimize the query we added yesterday and that might resolve the issue.

  - This chain of thought involves:

    - An assumption that the database is actually the culprit

    - Based on another assumption that it is overloaded

    - Based on another assumption that the non-optimized query is the culprit

  - After spending an afternoon optimizing that query and making sure it uses an index, after hours of hard work, the problem is still there.

  - In this particular case, it could've been that the database is returning a lot of data on the wire and the runtime is struggling with deserializing it. The database server itself is not overloaded; the runtime is. The optimized query wasn’t harmful, but it wasn’t necessary either; just as originally predicted 😄

- Assumptions MUST make sense. If you have to bend reality and break a few rules for your assumption to make sense, it probably isn't sound.

  - Here is an example: Maybe that code is very inefficient for some users. I tried replaying some of those requests for the same power-user and it did indeed run slowly for that user 90% of the time. I also kept an eye on CPU usage and it indeed spiked during those 90% of runs. I can't explain the other 10%, but maybe it's just an outlier?

  - Here it was assumed that:

    - An inefficient block of code is the culprit

    - Testing for a particular user indeed ran slower than normal 90% of the time

    - The assumption was even verified by looking at CPU usage, and it indeed spiked!

  - But the unexplained 10% was brushed aside. Confirmation bias kicked in and the assumption was forced to fit by bending reality a little bit and dismissing the 10% as an outlier or the server just being "moody" 😄 The code was optimized, only to discover that the issue still persists 89% of the time.

  - Remember, software is deterministic and things don't "just happen". If the assumption doesn't make rock-solid sense, you're probably not looking in the right place.

  - In this particular case, for example, the problem could've been that a completely different area of the code is memory-inefficient, which causes the server to use swap memory. Depending on when the test was run, the server was heavily swapping, which caused the code to be extra slow. When the server was not swapping, things were super fast. In hindsight, the original call not to optimize the code wasn’t off; the real issue was elsewhere 😄

- Share your assumptions with your team and verify them together. If you keep your ideas to yourself, the team is more likely to miss out on a good idea you have, or you may stay trapped in your own logical bubble. Sharing is the best shortcut to:

  - exchanging knowledge and scrutinizing what doesn't make sense

  - understanding whether the idea was explored before

  - crowd-sourcing ideas to come up with new assumptions or verify existing ones (some assumptions are not as easily verifiable as others)
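To make the database example above a bit more concrete, here is a minimal Python sketch of verifying where the time actually goes before optimizing anything. Everything in it is an assumption for illustration: `time_stages` and `slowest_stage` are hypothetical helpers, and `run_query` and `deserialize` are stand-ins for your real database call and decoding step. The idea is simply to measure each stage separately so "the database is the slow part" becomes something you can check rather than assume.

```python
import json
import time
from typing import Any, Callable


def time_stages(stages: list[tuple[str, Callable[[Any], Any]]], initial: Any = None) -> dict[str, float]:
    """Run stages in order, passing each result to the next, and return seconds spent per stage."""
    durations: dict[str, float] = {}
    value = initial
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        durations[name] = time.perf_counter() - start
    return durations


def slowest_stage(durations: dict[str, float]) -> str:
    """Return the name of the stage with the largest measured duration."""
    return max(durations, key=durations.get)


# Hypothetical stand-ins: replace these with the real query and decoding calls.
def run_query(_: Any) -> str:
    # Pretends to be a database call that returns a large serialized payload.
    return json.dumps([{"id": i, "name": f"user-{i}"} for i in range(50_000)])


def deserialize(payload: str) -> list:
    # Pretends to be the runtime turning the payload into objects.
    return json.loads(payload)


if __name__ == "__main__":
    durations = time_stages([("query", run_query), ("deserialize", deserialize)])
    for name, seconds in durations.items():
        print(f"{name}: {seconds * 1000:.1f} ms")
    print("slowest stage:", slowest_stage(durations))
```

A measurement like this only verifies the first assumption in the chain. If the query stage turns out to be fast, there is no reason to move on to "the database is overloaded" at all.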

Step 2 - Do NOT fix without evidence

Before investing effort into a fix, make sure you have concrete evidence that what you're fixing is actually the problem. You'll have that evidence if you did Step 1 well enough 😄

If you're tempted to try a quick fix, that may be fine as long as it doesn't take more than 20 minutes of work. Otherwise, you're acting blind, making your own life harder and getting slightly demoralized with every failed fix!
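One way to make "concrete evidence" tangible is to check whether a suspected cause accounts for every observation, not just most of them. The sketch below is a hypothetical helper, not a standard tool: `Sample`, `evaluate_hypothesis`, and the latency numbers are all invented for illustration. It flags two kinds of leftovers: slow requests where the suspected cause was absent, and fast requests where it was present.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    latency_ms: float
    cause_present: bool  # was the suspected cause observed during this request?


def evaluate_hypothesis(samples: list[Sample], slow_threshold_ms: float) -> dict:
    """Count the observations that the suspected cause fails to explain."""
    slow = [s for s in samples if s.latency_ms >= slow_threshold_ms]
    fast = [s for s in samples if s.latency_ms < slow_threshold_ms]
    unexplained_slow = sum(1 for s in slow if not s.cause_present)
    contradicting_fast = sum(1 for s in fast if s.cause_present)
    return {
        "slow": len(slow),
        "unexplained_slow": unexplained_slow,
        "contradicting_fast": contradicting_fast,
        # The hypothesis only holds if nothing is left over on either side.
        "holds": len(slow) > 0 and unexplained_slow == 0 and contradicting_fast == 0,
    }


if __name__ == "__main__":
    # Invented numbers: 9 slow replays and 1 fast replay of the same request.
    # Hypothesis A: "the inefficient code path ran" (true for every replay).
    code_path = [Sample(900, True)] * 9 + [Sample(40, True)]
    # Hypothesis B: "the server was swapping" (true only for the slow replays).
    swapping = [Sample(900, True)] * 9 + [Sample(40, False)]
    print("inefficient code:", evaluate_hypothesis(code_path, slow_threshold_ms=500))
    print("swapping:", evaluate_hypothesis(swapping, slow_threshold_ms=500))
```

With these made-up samples, the "inefficient code" hypothesis leaves one fast run it cannot explain, which is exactly the kind of 10% that should not be brushed aside.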

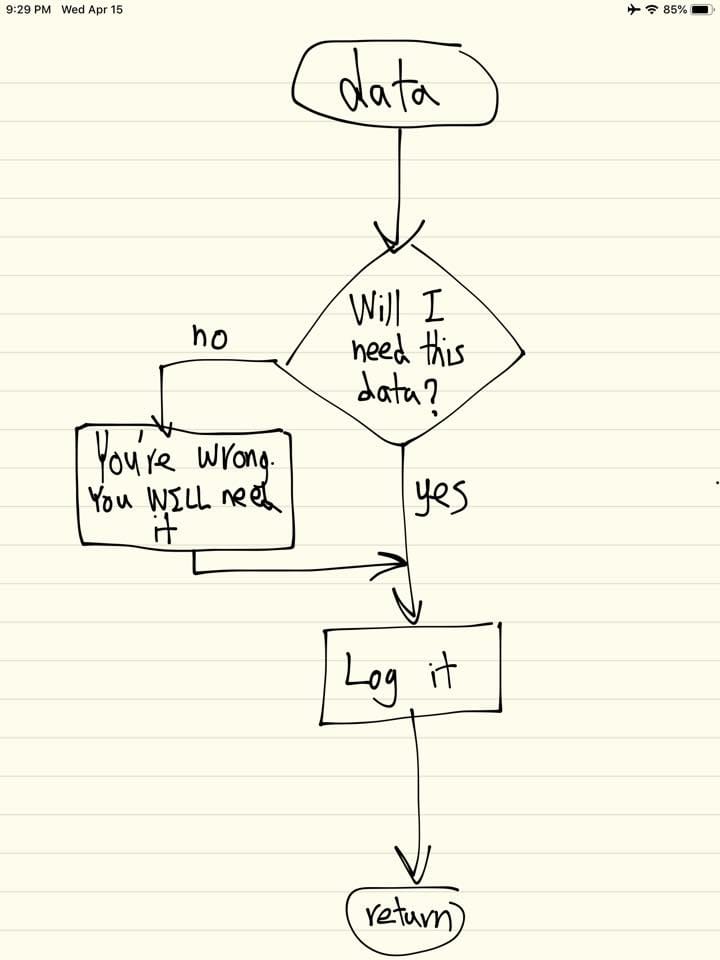

Be Prepared Ahead Of Time

This whole process is an art and it rests on you and your team being able to

- Make high-quality assumptions

- AND Verify them

If you're not able to make high-quality assumptions, you cannot move forward.

If you're able to make good assumptions but can't verify them, you're not doing better than guessing!

Here are some tips to ensure you're prepared:

- Understand the technology you use and how it works really well; do not settle for "Oh well I guess it works" level of understanding.

  - Tip: Research and/or ask AI about the runtime metrics for whatever tech you use and have it explain what they represent and how they affect performance and reliability. Do NOT settle for a mediocre understanding; if you can't explain it to a 6-year-old kid, seek deeper understanding.

- Be ready to get proven wrong; none of us know everything, we're all learning different aspects of the systems we run every day so keep an open mind but scrutinize what doesn't make sense. Who among us hasn’t made a guess under pressure that led us astray? This process works best as a team effort so keep each other grounded 😄

- Make sure there's proper telemetry and observability in the things you run.

  - Understand the systems collecting this telemetry, how they work, and what their limitations are. Some issues simply cannot be measured by particular tools and using them is unhelpful at best, misleading at worst.
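As a small illustration of knowing what a metric actually represents, here is a sketch that derives swap usage from text in the format of Linux's `/proc/meminfo`, where `SwapTotal` and `SwapFree` are reported in kB. The helper names `parse_meminfo` and `swap_used_kb` and the sample values are assumptions made up for this example; in practice your monitoring stack probably exposes this number already, and the point is to understand how it is computed.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: number}. Most fields are in kB."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        # Skip lines that are not "Name:   <integer> [unit]".
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def swap_used_kb(meminfo: dict[str, int]) -> int:
    """Swap in use is the total swap minus the swap still free (both in kB)."""
    return meminfo["SwapTotal"] - meminfo["SwapFree"]


if __name__ == "__main__":
    # Made-up sample in /proc/meminfo format; on a Linux host you could
    # read the real file with open("/proc/meminfo").read() instead.
    sample = (
        "MemTotal:       16000000 kB\n"
        "SwapTotal:       2097148 kB\n"
        "SwapFree:        1048574 kB\n"
    )
    info = parse_meminfo(sample)
    print("swap used (kB):", swap_used_kb(info))
```

A single reading like this is a snapshot. For the swapping scenario described earlier, you would want to sample it over time and line the readings up with the slow requests.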

Finally

This process is an art. The more you do it, the better you'll get at it and you'll even develop an intuition on how to make good assumptions 😄

It might seem less productive since more time is needed before attempting a fix, but it actually isn't, since it ensures two important things:

- Little time is wasted on unneeded work

- The root cause is reached in bounded time rather than after endless guessing

Evidence beats intuition every single time.

I'm curious to hear what you think of this process and how you approach critical incidents with unknown causes, let me know what you think 🤔
